Blog

All Things AI.

Categories: AI in Schools - AI in Government - Train for AI - AI Consulting Services - Generative AI - AI Literacy - AI Ethics

Why “Efficiency Only” Leaders Will Miss the Future of Work

As a woman who built a 40‑year tech career without a four‑year degree, I’m part of the 60% of U.S. workers who, economists like Simon Johnson warn, are most at risk in the age of AI. Let’s explore why earlier tech waves opened doors for people like me, how today’s AI boom could slam them shut, and what it would take (AI literacy, durable skills, and courageous governance) to keep opportunity open for all.

From clueless to clued-in: 38 days immersed in AI governance

It’s been 38 days since I joined AI Governance Group (AIGG). In that short span, I’ve begun to see AI less as a shiny (and sometimes scary) efficiency tool and more as an undertaking about governance, trust, and human impact.

Federal Funding Opportunity: $50M for AI in Education

Most institutions racing to submit FIPSE grant proposals will focus entirely on their innovative AI tools and transformative outcomes. They'll describe cutting-edge technology, ambitious goals, and measurable student impacts. And most of them will miss what reviewers are actually looking for. We can help with a 30-minute readiness assessment call.

Ghosts in the Machine: Real Scares from the AI Front

AI may be the productivity potion of our time, but left unchecked, it’s also summoning real-world horror stories. Here are four of 2025’s spookiest AI tales—every one true—and how smart governance can keep you from becoming the next cautionary headline.

October 2025 Brand Brief – “When AI Becomes Your Brand: Trust, Governance & Experience”

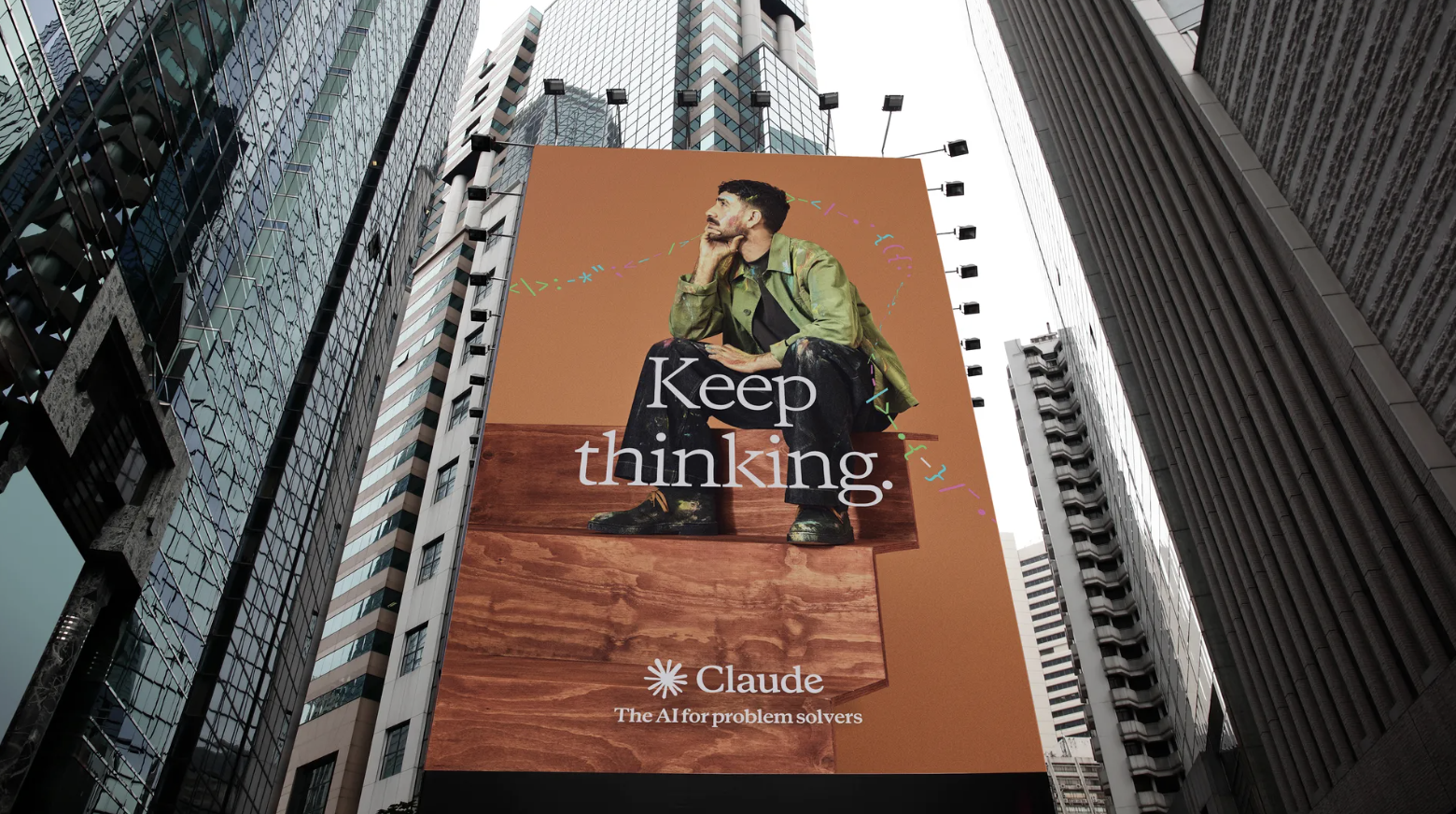

As brands increasingly embed AI not just in products but in identity, experience and messaging, the direct link between AI governance and brand trust is becoming unavoidable. This month, we’ve seen three converging currents: experience-driven branding of AI; enterprise adoption raising brand risk; and the shift from principle to proof in governance.

When Growth Is More Important Than Safeguards: The Real Cost for Youth in the AI Era

In the rush to monetize generative AI tools, something critical is being sidelined: the welfare of young people. When Sam Altman announced a new direction for OpenAI’s next-generation system, GPT‑5 (along with the related move toward more “friend-like” and adult-oriented chat experiences), the company claimed we now have “better tools” to make these experiences safe. But as someone who works daily with students and educators navigating the realities of AI in the classroom and at home, I’m profoundly unconvinced. My issue isn’t porn, or whether someone dresses up the feature as just “adult content.” My issue is that we haven’t really protected our young people yet, nor have we sufficiently educated broad populations about how GenAI works, what information it collects, and how it shapes relationships, emotions, and behaviors.

Shadow AI is Already Here. Why Your Insurance Likely Won’t Cover What’s Next

Your vendors are adding AI capabilities to software you already own, often without meaningful notification or your consent. Two-thirds of SaaS applications now have AI features. Your legal team reviewed the original contract three years ago. But did anyone review last quarter's terms of service update? Probably not.

Request for Collaboration: Help Shape the AI & Data Safety Shield for Schools

Across the country, school leaders are navigating a growing paradox: AI is becoming part of classrooms, communications, and district operations — but the systems that keep students safe haven’t caught up. As part of my work in the EdSAFE AI Catalyst Fellowship, I’m studying this challenge through a research project called the AI & Data Safety Shield for Schools. The EdSAFE AI Catalyst Fellowship is a national program that supports applied research and innovation to advance ethical, transparent, and safe AI in education. Each Fellow explores a Problem of Practice—a real-world challenge that, if solved, could help schools use AI responsibly and equitably.

Human Dimensions of AI Literacy: Why Teaching Tech Misses the Point

The job market has fundamentally transformed. We’re preparing a workforce for jobs that don’t yet exist. Skills that took years to master are being automated, while entirely new capabilities, from prompt engineering to AI ethics, have suddenly moved to the center of the conversation. This isn’t about robots taking jobs; it’s about understanding which human skills matter more than ever, and which ones won’t save you.

Building the Future of Education: My Journey into Durable Skills

I attended a Lightcast workshop on durable skills for the AI age. Then I actually tried to GET the data. What followed was a perfect lesson in the very skills we should be teaching: problem-solving through failure, asking better questions, and collaborating with AI as a learning partner.

The irony? Learning about AI-age skills BY using AI to learn. Sometimes the process teaches more than the product.

Escaping Local Maxima: What Grief, High Jumping, and AI Taught Me About Transformation

A year after loss, I'm still finding my way forward. Grief taught me what AI is now teaching workplaces: sometimes progress means going backward first. Like Dick Fosbury, who revolutionized high jumping by being willing to look foolish, we must step down from our comfortable local peaks to discover what's possible on higher ground.

September 2025 Brand Brief – “Why Trust and Governance Now Define AI-Powered Brands”

As AI becomes ever more central to brand identity, the stakes for transparency, ethics, and trust are rising just as fast. This month, we’ve seen brands and regulators pushing to ensure AI delivers not just innovation but also responsibility. Below are the biggest developments across trust frameworks, governance, and brand positioning with generative and agentic AI.

3 Ways AI Governance Actually Speeds You Up (Not Slows You Down)

Budget season is upon us. If you’re sitting down with 2026 numbers, you already know the pressure:

Cut costs where you can.

Find growth where you must.

Show the board a clear return on every line item.

Here’s the mistake too many teams will make in next year’s budgets: they’ll throw money at AI pilots or vendor contracts without investing in governance. It feels faster in the short term, but it costs more in the long run. Here’s why governance is not just risk management: it’s the thing that actually makes AI adoption faster, safer, and more budget-friendly.

Patterns in time, minds in motion

The O’Mind helps forward‑thinking leaders systematize knowledge, capture IP, and integrate human and AI‑powered processes, creating a living organizational intelligence that can be used to boost productivity, accelerate onboarding, or prepare for successful M&A.

The countdown has begun

On August 2, 2026, organizations that sell into the EU, or whose AI-enabled products or services are used in the EU, must be able to prove they provide AI literacy education and training to their employees. Fines for those who don’t will follow.

America’s AI Action Plan: What’s in It, Why It Matters, and Where the Risks Are

This article sets out to inform the reader about the AI Action Plan without opinion or hype. Let’s dig in. On July 23, 2025, the White House released Winning the Race: America’s AI Action Plan, a 28-page roadmap with more than 90 federal actions grouped under three pillars: Accelerate AI Innovation, Build American AI Infrastructure, and Lead in International AI Diplomacy & Security. The plan rescinds the 2023 AI Bill of Rights, calls for revising parts of the NIST AI Risk Management Framework, and builds on January’s Executive Order 14179 (“Removing Barriers to American Leadership in Artificial Intelligence”). If fully funded and executed, the plan would reshape everything from K-12 procurement rules to the way cities permit data-center construction.

AI Literacy and AI Readiness - the intersection matters most

As leaders consider how to adopt and scale new AI-enabled solutions, two phrases are surfacing more and more often in strategic conversations: AI literacy and AI readiness. They are related but not interchangeable, and understanding the distinction (and the magic where the two intersect) could determine whether your organization is poised to thrive in an AI-driven landscape.

July 2025 Brand Brief – AI in EdTech: “When Governance Gaps Become Brand Risks”

Each month, we spotlight real-world challenges that software creators face when trying to align with school district expectations, data privacy laws, and public trust—because in today’s market, your AI doesn’t just need to work, it needs to pass inspection.

The First AI Incident in Your Organization Won’t Be a Big One. That’s the Problem.

Your first AI incident won’t be big. But it will be revealing. It will expose the cracks in your processes, the ambiguity in your policies, and the reality of how your team uses AI. If you wait for a significant event before acting, you’ll already be behind. Building responsible AI systems doesn’t start with compliance. It begins with clarity and a willingness to take the first step before the incident occurs.

“HIPAA doesn’t apply to public schools.” That statement is technically correct, and dangerously misleading.

For years, the education sector has operated on the belief that FERPA (Family Educational Rights and Privacy Act) is the only law that matters when it comes to student data. And for much of the traditional classroom environment, that’s true. But the moment health-related services intersect with educational technology, whether through telehealth platforms, mental health apps, or digital IEP tools, the ground shifts. Suddenly, the boundary between FERPA and HIPAA isn’t just academic. It’s operational, legal, and reputational.